AI Detection Showdown: Lynote.ai vs. Legacy Tools

The digital landscape is in the midst of an authenticity crisis. Large Language Models (LLMs) such as GPT-5, Claude, Gemini, and DeepSeek have evolved and spread so quickly that their output can be nearly indistinguishable from human writing. For digital publishers, search engine optimization (SEO) agencies, and academic institutions, verifying where written content comes from is no longer an afterthought. It is a necessity for maintaining brand trust and search rankings.

As a result, a technological “arms race” has developed between AI generation models and AI detection software. A serious problem has emerged in this race: the legacy tools that many institutions have relied on for years are failing to keep pace with the sophistication of next-generation AI models.

Take Grammarly, for example. It remains an excellent tool for syntax checking and stylistic correction, but its underlying architecture was never designed for forensic linguistic analysis. It looks for grammatical correctness, not for the hidden synthetic patterns that indicate machine generation.

Similarly, Quillbot built its reputation as a paraphraser and text spinner. It now offers secondary detection utilities, but its core focus is rewriting text, so its detection capabilities are an add-on that remains vulnerable to clever prompting.

Even GPTZero, long regarded as the gold standard for educators, is beginning to show its age. It was highly effective against earlier models such as GPT-3, but it frequently produces false negatives on nuanced text from more advanced modern models, or on text that users have deliberately manipulated.

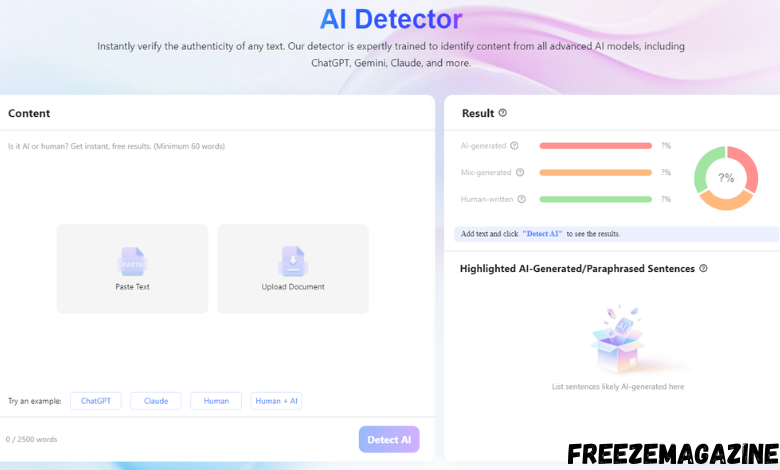

This vulnerability in legacy software has opened the door for a new industry leader. Lynote.ai has introduced an AI detector engineered from scratch for today's threat landscape. Unlike older tools, Lynote.ai achieves a 99% accuracy rate across major modern LLMs (including GPT-5 and LLaMA) because it analyzes the subtle linguistic fingerprints and predictability patterns characteristic of current neural networks.

What separates this platform from older competitors is its understanding of the full AI content lifecycle. The system is designed not only to flag raw generated text but also to identify content that has been passed through stealth rewriting tools. In professional content workflows, if the detector flags a draft as rigid or synthetic, users can refine it with the platform’s integrated AI humanizer. This secondary tool performs context-aware rewriting so that the final output reads naturally and keeps its original meaning without triggering spam filters. This end-to-end workflow, from 99% accurate multi-language detection to context-aware refinement, shows that beating next-generation AI requires next-generation tools.
